There Are Not Enough Slots Available in the System (MPI)

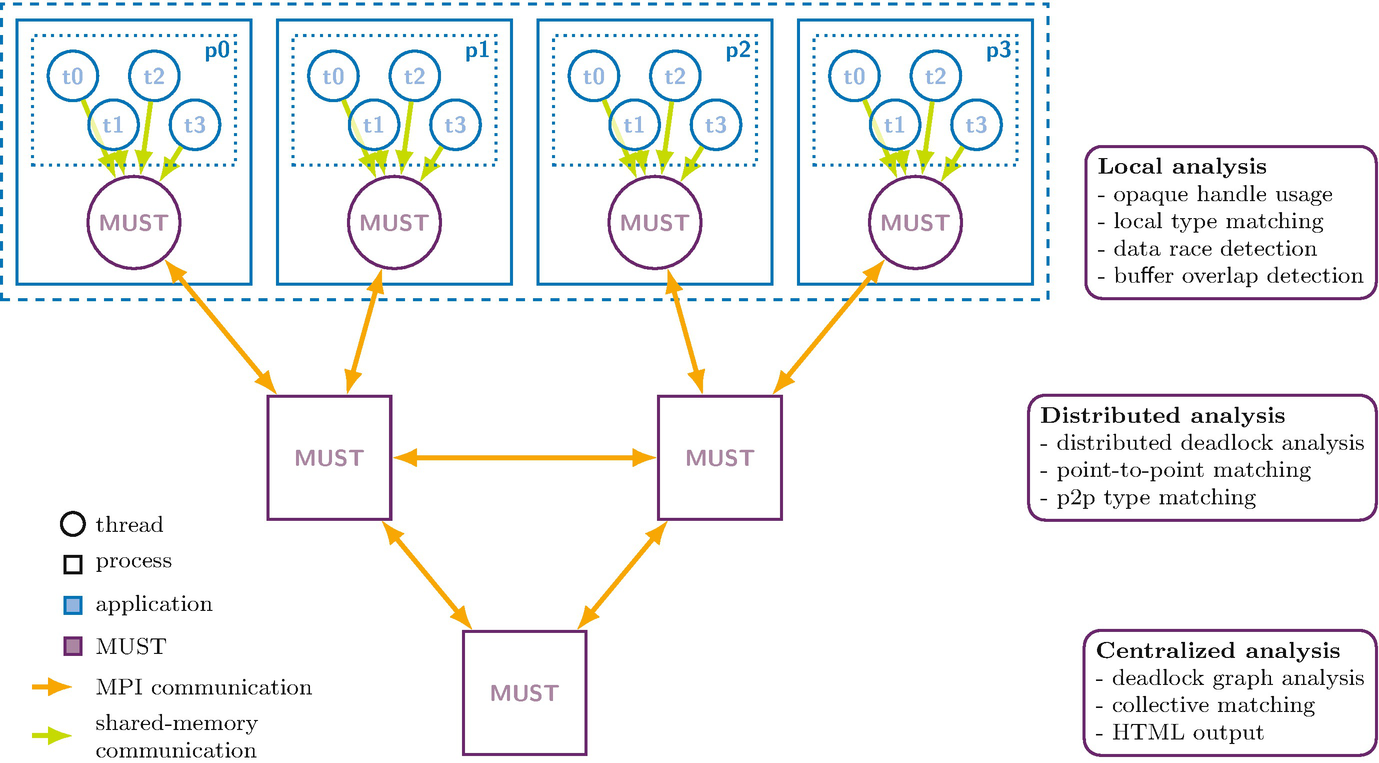

Slots have drawbacks. First, it is not trivial to determine the optimal numbers of map and reduce slots. Second, slots cause resource underutilization when there are not enough tasks to occupy all of them, which is mitigated by our proposed resource stealing. In distributed systems, failure is the norm rather than the exception.

If there are not enough CPUs available, the spawning facility will not wait for free CPUs, and it will not overbook CPUs either.

Pre-defined node selection: while starting up a parallel task, the following environment variables control the creation of the temporary node list used internally for spawning processes.

MPI point-to-point communication sends messages between two different MPI processes. One process performs a send operation while the other performs a matching receive. MPI guarantees that every message will arrive intact, without errors. Care must be exercised when using MPI, as deadlock can occur when the send and receive operations do not match.

Requesting more processes than there are slots fails like this:

$ mpirun -np 25 python -c "print('hey')"

There are not enough slots available in the system to satisfy the 25 slots that were requested by the application: python

Either request fewer slots for your application, or make more slots available for use.
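Open MPI takes slot counts from a hostfile (lines such as `node1 slots=4`), a resource manager, or the local machine. It refuses a request for more processes than the total rather than waiting or overbooking. Below is a minimal Python sketch of that rule. The function names and the `default_slots` fallback are illustrative assumptions, not Open MPI's own code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HostEntry:
    host: str
    slots: int


def parse_hostfile(text: str, default_slots: int = 1) -> List[HostEntry]:
    """Parse hostfile lines such as 'node1 slots=4' ('#' starts a comment).

    Lines without slots= fall back to `default_slots`; that default is an
    assumption here, since Open MPI's own default depends on the release.
    """
    entries = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue  # skip blank and comment-only lines
        host, *options = line.split()
        slots = default_slots
        for option in options:
            key, _, value = option.partition("=")
            if key == "slots":
                slots = int(value)
        entries.append(HostEntry(host, slots))
    return entries


def total_slots(entries: List[HostEntry]) -> int:
    """Sum the slots declared across all hosts."""
    return sum(entry.slots for entry in entries)


def check_request(np_requested: int, entries: List[HostEntry], app: str = "python") -> int:
    """Refuse (instead of waiting or oversubscribing) when np exceeds the slot total."""
    available = total_slots(entries)
    if np_requested > available:
        raise RuntimeError(
            "There are not enough slots available in the system to satisfy the "
            f"{np_requested} slots that were requested by the application: {app}"
        )
    return available


if __name__ == "__main__":
    hosts = parse_hostfile("localhost slots=4\n# a comment\nnode2 slots=2\n")
    print(total_slots(hosts))
    try:
        check_request(25, hosts)
    except RuntimeError as err:
        print(err)
```

The error message itself names the two remedies: request fewer processes, or declare more slots (for example in a hostfile). Open MPI's `mpirun` also accepts `--oversubscribe` to deliberately run more processes than slots.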

From charlesreid1

Main Jupyter page: Jupyter

ipyparallel documentation: https://ipyparallel.readthedocs.io/en/latest/

Steps

Install OpenMPI

Start by installing OpenMPI:
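However you install it, you can confirm that the launcher ended up on PATH before going further. Here is a small Python sketch, assuming the launcher is named `mpirun` or `mpiexec`. The helper names are hypothetical.

```python
import shutil
import subprocess
from typing import Optional, Tuple


def find_mpi_launcher(candidates: Tuple[str, ...] = ("mpirun", "mpiexec")) -> Optional[str]:
    """Return the full path of the first launcher found on PATH, else None."""
    for name in candidates:
        path = shutil.which(name)
        if path is not None:
            return path
    return None


def first_version_line(executable: str) -> str:
    """Return the first line that `<executable> --version` prints (stdout or stderr)."""
    result = subprocess.run(
        [executable, "--version"], capture_output=True, text=True, check=False
    )
    output = (result.stdout or result.stderr).strip()
    return output.splitlines()[0] if output else ""


if __name__ == "__main__":
    launcher = find_mpi_launcher()
    if launcher is None:
        print("No mpirun/mpiexec on PATH: OpenMPI is missing or not on PATH")
    else:
        print(launcher, "->", first_version_line(launcher))
```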

Install Necessary Notebook Modules

Install the mpi4py library:

Install the ipyparallel notebook extension:
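A quick way to confirm that both packages are visible to the interpreter the notebook uses is to ask the import system for them. This is a sketch using only the standard library. The pip hint assumes the PyPI package names match the module names (`mpi4py`, `ipyparallel`).

```python
import importlib.util
from typing import List, Tuple


def missing_modules(names: Tuple[str, ...] = ("mpi4py", "ipyparallel")) -> List[str]:
    """Return the names the import system cannot find in this interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing:", ", ".join(missing), "(try: pip install " + " ".join(missing) + ")")
    else:
        print("mpi4py and ipyparallel are importable")
```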

Start MPI Cluster

Then start an MPI cluster using ipcluster:

The output should look like this:

Start cluster with MPI (failures)

If you do pass an --engines flag, though, it could be problematic:

To solve this problem, you'll need to create an IPython profile, which IPython Parallel can then load. You'll also add a setting to the profile's config file specifying that MPI should be used to start any clusters.

Link to documentation with description: https://ipyparallel.readthedocs.io/en/stable/process.html#using-ipcluster-in-mpiexec-mpirun-mode

Then:

Then add the line:
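Here is a minimal sketch of this step in Python. It assumes the profile was created with `ipython profile create --parallel --profile=mpi`; the name `mpi` is our choice. It also assumes the profile lives in the default `~/.ipython/profile_mpi/` directory. The launcher setting follows older ipyparallel documentation for mpiexec/mpirun mode; newer releases may expect a different launcher name, so treat it as an assumption to check against the linked docs. The helper appends the line only if it is not already there.

```python
from pathlib import Path

# Setting as described in older ipyparallel docs for mpiexec/mpirun mode;
# newer releases may use a different launcher name (assumption to verify).
MPI_LAUNCHER_LINE = "c.IPClusterEngines.engine_launcher_class = 'MPIEngineSetLauncher'"


def ensure_config_line(config_path: Path, line: str = MPI_LAUNCHER_LINE) -> bool:
    """Append `line` to the config file unless an identical line exists.

    Returns True when the file was changed.
    """
    config_path.parent.mkdir(parents=True, exist_ok=True)
    existing = config_path.read_text() if config_path.exists() else ""
    if line in existing.splitlines():
        return False
    with config_path.open("a") as handle:
        if existing and not existing.endswith("\n"):
            handle.write("\n")  # keep the new setting on its own line
        handle.write(line + "\n")
    return True


# Usage (profile name "mpi" is hypothetical):
# ensure_config_line(Path.home() / ".ipython" / "profile_mpi" / "ipcluster_config.py")
```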

Then start ipcluster (which creates the cluster for IPython Parallel to use) and tell it to use the mpirun program:

If it is still giving you trouble, try dumping debug info:
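Put together, starting the cluster with an MPI-enabled profile and debug logging is a single `ipcluster start` invocation. The sketch below only assembles that command line, so it can be printed or passed to `subprocess.run`. `-n` (engine count), `--profile` and `--debug` are standard ipcluster options. The default profile name `mpi` is a hypothetical choice.

```python
import shlex
from typing import List


def ipcluster_start_argv(n: int, profile: str = "mpi", debug: bool = False) -> List[str]:
    """Build the `ipcluster start` command line.

    -n sets the number of engines, --profile picks the profile whose config
    selects the MPI launcher, and --debug turns on debug-level logging.
    """
    argv = ["ipcluster", "start", "-n", str(n), f"--profile={profile}"]
    if debug:
        argv.append("--debug")
    return argv


if __name__ == "__main__":
    # Print instead of running; pass the list to subprocess.run to launch it.
    print(shlex.join(ipcluster_start_argv(2, debug=True)))
```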

This is still not working... more info: https://stackoverflow.com/questions/33614100/setting-up-a-distributed-ipython-ipyparallel-mpi-cluster#33671604

I thought I had just forgotten to run a controller, but starting one doesn't fix anything:

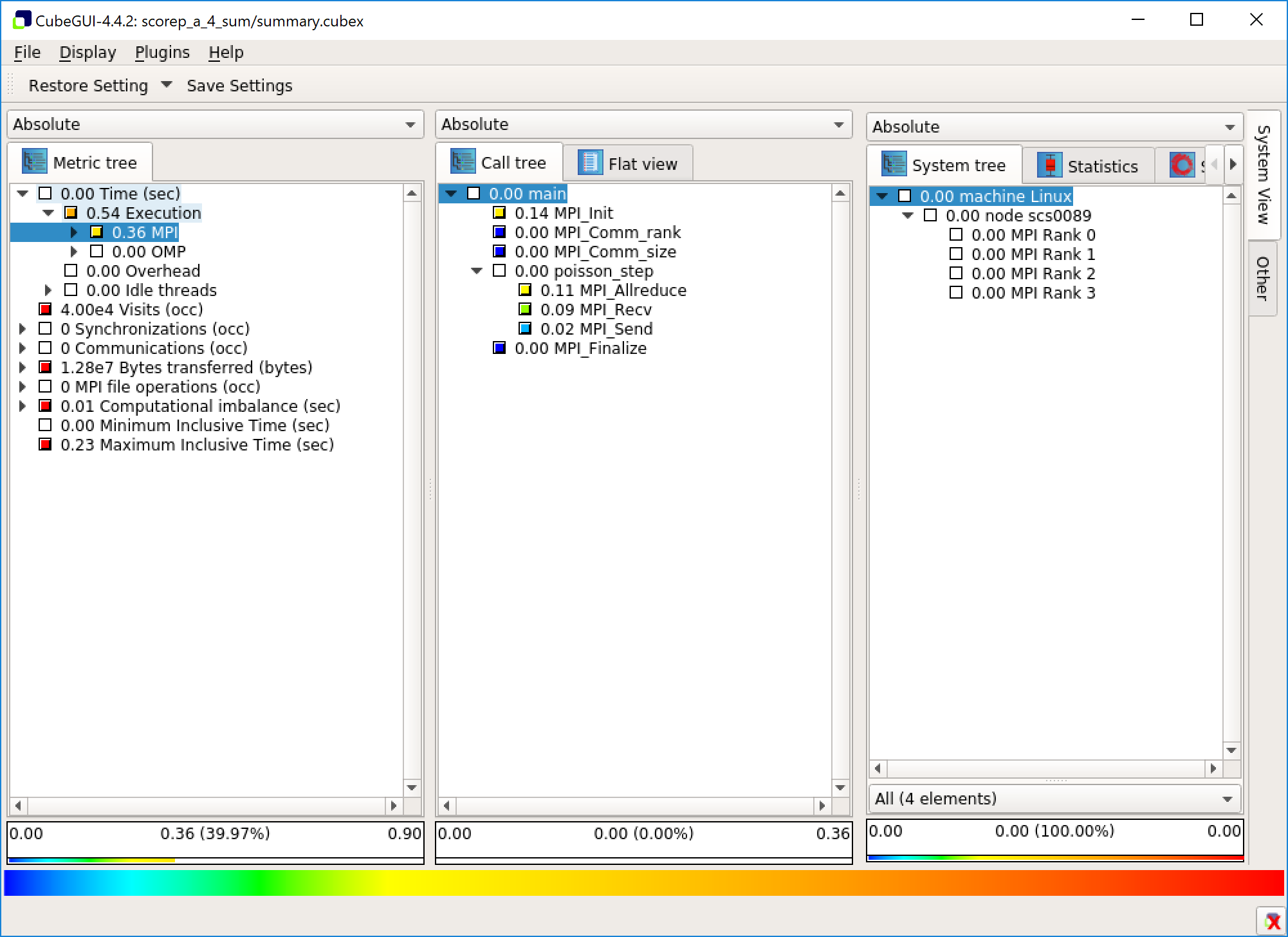

FINALLY, adding debug info helped track down the problem: I was specifying 4 processes on a 2-processor system.
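The lesson generalizes: never ask for more engines than the machine can provide. Below is a small guard, sketched under the assumption that `os.cpu_count()` is an acceptable default measure of capacity. It reports logical CPUs, which may differ from the slot count Open MPI derives, so pass `available` explicitly when you know your slot count.

```python
import os
from typing import Optional


def safe_engine_count(requested: int, available: Optional[int] = None) -> int:
    """Clamp the requested number of engines/processes to the available CPUs."""
    if requested < 1:
        raise ValueError("at least one engine is required")
    if available is None:
        available = os.cpu_count() or 1  # cpu_count() can return None
    return min(requested, available)


if __name__ == "__main__":
    print(safe_engine_count(4, available=2))  # the 4-on-2 request is clamped to 2
```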

Start cluster with MPI (success)

The cluster runs when I change to:

And when I connect to the cluster using:

Problems sharing a variable using px magic

Ideally, we want something like this to work:

then:

However, this fails.
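One likely reason is namespaces, assuming the failing cell used a variable defined only in the notebook. Each engine is a separate process with its own globals, so a name assigned locally does not exist on the engines until it is sent there. The toy model below is plain Python and is NOT ipyparallel's implementation. It only illustrates the push/pull idea the documentation hints at. In ipyparallel itself, `push` and `pull` are methods of a `DirectView`.

```python
from typing import Any, Callable, Dict, List


class ToyDirectView:
    """Toy stand-in for a set of engines; NOT ipyparallel's implementation.

    Each engine is modeled as its own namespace dict, so names defined in
    the notebook are invisible to the engines until they are pushed.
    """

    def __init__(self, n_engines: int) -> None:
        self.namespaces: List[Dict[str, Any]] = [{} for _ in range(n_engines)]

    def push(self, mapping: Dict[str, Any]) -> None:
        """Copy every name in `mapping` into each engine's namespace."""
        for namespace in self.namespaces:
            namespace.update(mapping)

    def execute(self, body: Callable[[Dict[str, Any]], None]) -> None:
        """Run `body` once per engine against that engine's namespace (like a %%px cell)."""
        for namespace in self.namespaces:
            body(namespace)

    def pull(self, name: str) -> List[Any]:
        """Collect `name` from every engine; raises KeyError if an engine lacks it."""
        return [namespace[name] for namespace in self.namespaces]


if __name__ == "__main__":
    view = ToyDirectView(2)
    view.push({"a": 10})  # without this push, "a" is undefined on the engines
    view.execute(lambda ns: ns.update(b=ns["a"] * 2))
    print(view.pull("b"))  # [20, 20]
```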

Documentation:

- Suggests answer may be push/pull?

- Gives px example with variable assignment: https://github.com/ipython/ipyparallel/blob/527dfc6c7b7702fb159751588a5d5a11d8dd2c4f/docs/source/magics.rst

More hints, but nothing solid: https://github.com/ipython/ipyparallel/blob/1cc0f67ba12a4c18be74384800aa906bc89d4dd3/docs/source/direct.rst

Original notebook: https://github.com/charlesreid1/ipython-in-depth/blob/master/examples/Parallel%20Computing/Using%20Dill.ipynb

IPython Parallel built-in magics (mentions px magic, but no examples):

- Cell magic: https://ipython.readthedocs.io/en/stable/interactive/magics.html#cell-magics

- All magic: https://ipyparallel.readthedocs.io/en/latest/magics.html

Notebook illustrating IPython usage of pxlocal: https://nbviewer.jupyter.org/gist/minrk/4470122

As usual, there are no easy solutions when it comes to R and mac ;)

First of all, I suggest getting a clean, isolated copy of OpenMPI so you can be sure that your installation has no issues with mixed libraries. To do so, simply compile OpenMPI 3.0.0:
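The build follows the usual autotools routine. Here is a Python sketch of it, assuming the 3.0.0 source tarball is already unpacked and that you choose your own install prefix (the path in the usage comment is hypothetical). It also builds an environment whose PATH puts the new `mpicc`/`mpirun` first, so they win over any other MPI on the system.

```python
import os
import shlex
import subprocess
from pathlib import Path
from typing import Dict, List, Optional


def openmpi_build_steps(prefix: Path, jobs: int = 4) -> List[List[str]]:
    """Standard configure / make / make install sequence into a private prefix."""
    return [
        ["./configure", f"--prefix={prefix}"],
        ["make", f"-j{jobs}"],
        ["make", "install"],
    ]


def run_steps(steps: List[List[str]], source_dir: Path) -> None:
    """Run each step inside the unpacked source tree, stopping on the first failure."""
    for argv in steps:
        print("+", shlex.join(argv))
        subprocess.run(argv, cwd=source_dir, check=True)


def env_with_prefix(prefix: Path, base: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Copy of the environment with prefix/bin first on PATH."""
    env = dict(os.environ if base is None else base)
    env["PATH"] = str(prefix / "bin") + os.pathsep + env.get("PATH", "")
    return env


# Usage (hypothetical locations):
# prefix = Path.home() / "opt" / "openmpi-3.0.0"
# run_steps(openmpi_build_steps(prefix), Path("openmpi-3.0.0"))
```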

It’s time to verify that OpenMPI works as expected. Put the content presented below into hello.c and run it.

To compile and run it, do the following:
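Compiling goes through the `mpicc` wrapper compiler and running goes through `mpirun`. The Python sketch below builds those two commands and runs them only when both tools are on PATH. The file names and the process count of 2 are assumptions; keep the count within your machine's slots.

```python
import shutil
import subprocess
from typing import List, Optional


def hello_commands(source: str = "hello.c", binary: str = "./hello", np: int = 2) -> List[List[str]]:
    """Compile with the mpicc wrapper, then start `np` copies with mpirun."""
    return [
        ["mpicc", source, "-o", binary],
        ["mpirun", "-np", str(np), binary],
    ]


def run_if_available(commands: List[List[str]]) -> Optional[List[str]]:
    """Run the commands in order and return their stdout, or None if a tool is missing."""
    if any(shutil.which(argv[0]) is None for argv in commands):
        return None
    outputs = []
    for argv in commands:
        result = subprocess.run(argv, capture_output=True, text=True, check=True)
        outputs.append(result.stdout)
    return outputs
```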

If you get output like the one below, it’s OK. If not, “Houston, we have a problem”.

Now it’s time to install Rmpi. Unfortunately, on macOS you need to compile it from source. Download the source package and build it:
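A source build of Rmpi is driven by `R CMD INSTALL` with configure arguments that tell Rmpi where the MPI headers and libraries are and which MPI flavor to expect. The Python sketch below assembles such a command against the isolated OpenMPI prefix. The tarball name and prefix are hypothetical, and the exact `--with-Rmpi-*` options should be checked against the Rmpi package's own install notes.

```python
import shlex
from typing import List


def rmpi_install_argv(tarball: str, mpi_prefix: str) -> List[str]:
    """Build `R CMD INSTALL` for an Rmpi source tarball against a specific Open MPI."""
    configure_args = " ".join(
        [
            f"--with-Rmpi-include={mpi_prefix}/include",  # MPI headers
            f"--with-Rmpi-libpath={mpi_prefix}/lib",      # MPI libraries
            "--with-Rmpi-type=OPENMPI",                   # MPI flavor
        ]
    )
    return ["R", "CMD", "INSTALL", tarball, f"--configure-args={configure_args}"]


if __name__ == "__main__":
    # Hypothetical tarball name and install prefix; substitute your own.
    print(shlex.join(rmpi_install_argv("Rmpi.tar.gz", "/opt/openmpi-3.0.0")))
```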

Once it is ready, you can check whether everything works. First, run it outside R, just to make sure everything was compiled and works as expected:

Now we can try to run everything inside R:

Oops. The issue here is that Rmpi runs MPI code through the MPI API and doesn’t call mpirun, so we can’t pass the hostfile directly. However, there is hope: the hostfile is one of the ORTE parameters (for more info, take a look here and here).

This means we can put the location of the hostfile into ~/.openmpi/mca-params.conf. Just do the following:
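The parameter in question is `orte_default_hostfile`, written as `name = value` in that file. Here is a Python sketch that writes a hostfile and points the parameter at it, replacing any earlier value. The hostfile location and the `localhost slots=4` entry are hypothetical. The parameter name applies to ORTE-based releases such as the 3.0.0 built here, and later releases may handle this differently.

```python
from pathlib import Path
from typing import Dict, List


def write_hostfile(path: Path, hosts: Dict[str, int]) -> None:
    """Write one 'host slots=N' line per host."""
    path.parent.mkdir(parents=True, exist_ok=True)
    lines = [f"{host} slots={slots}" for host, slots in hosts.items()]
    path.write_text("\n".join(lines) + "\n")


def set_default_hostfile(conf_path: Path, hostfile: Path) -> None:
    """Point orte_default_hostfile at `hostfile`, keeping other settings intact."""
    conf_path.parent.mkdir(parents=True, exist_ok=True)
    kept: List[str] = []
    if conf_path.exists():
        kept = [
            line
            for line in conf_path.read_text().splitlines()
            if line.split("=", 1)[0].strip() != "orte_default_hostfile"
        ]
    kept.append(f"orte_default_hostfile = {hostfile}")
    conf_path.write_text("\n".join(kept) + "\n")


# Usage (hypothetical hostfile location and slot count):
# openmpi_dir = Path.home() / ".openmpi"
# write_hostfile(openmpi_dir / "hostfile", {"localhost": 4})
# set_default_hostfile(openmpi_dir / "mca-params.conf", openmpi_dir / "hostfile")
```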

Now, we can try to run R once more:

This time, it worked ;) Have fun with R!